Image Similarity Search

As a society, we are increasingly surrounded by visual data. From social media to streaming video to machine vision, visual datasets are only getting larger and more complex. As such, the tools available to navigate and interact with this data are getting more powerful all the time. At ApertureData, we strive to create a platform that gives our users access to this data in any way they choose. Critical to this is the ability to describe data using arbitrary features and to navigate a dataset using any feature space.

In this example we explore how to use ApertureDB to query for similar images within a dataset using an arbitrary feature space.

We'll cover the following concepts:

- Ingesting images and descriptors into ApertureDB
- Querying for similar images

Ingest Images and Descriptors into ApertureDB

For the purpose of this example, we'll use two freely available datasets:

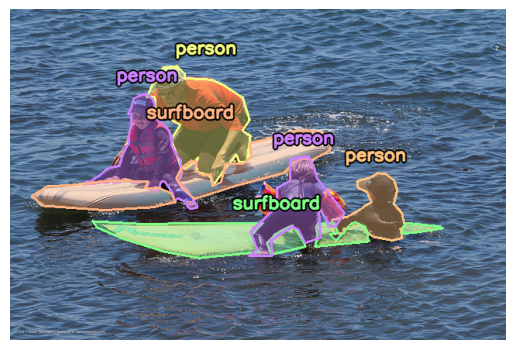

- COCO2017 is a collection of images with annotations describing the objects portrayed in each image (examples below).

- YFCC100M is a collection of 100 million images and videos that includes 4096-dimensional feature vectors generated from each.

In this case, we're looking at a small subset of several thousand images that are common to both.

COCO2017 image with polygon annotations

YFCC100M image with descriptor vector ``[5.716, -12.571, -12.847, 7.851, ... ]``

Here, we show how to ingest the images along with their descriptors.

Before ingesting descriptors, we must first create a descriptor set. The DescriptorSet defines the feature space over which to perform similarity search. When creating a descriptor set, you can choose a distance metric and an indexing engine that will be used when searching for nearest neighbors.

```json
[{
  "AddDescriptorSet": {
    "name": "coco_descriptors",
    "dimensions": 4096,
    "engine": "HNSW",
    "metric": "L2"
  }
}]
```
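The descriptor set above uses the ``L2`` (Euclidean) metric with an ``HNSW`` index. HNSW is an approximate nearest-neighbor index: it trades a little recall for much faster search than comparing the query against every stored vector. As a point of reference, here is a minimal Python sketch of the exact search it approximates, a brute-force k-nearest-neighbor scan under L2. The function name ``knn_l2`` and the in-memory dictionary of descriptors keyed by ID are illustrative assumptions, not part of ApertureDB.

```python
import math
from typing import Dict, List, Sequence, Tuple


def knn_l2(query: Sequence[float],
           dataset: Dict[int, Sequence[float]],
           k: int) -> List[Tuple[int, float]]:
    """Return the k (id, distance) pairs closest to `query` under L2.

    Exact brute-force search: every stored vector is compared with the query.
    """
    # Every descriptor in a set shares one dimensionality, like "dimensions": 4096.
    if any(len(vec) != len(query) for vec in dataset.values()):
        raise ValueError("all descriptors must have the same dimensions as the query")
    # Euclidean distance from the query to every stored descriptor.
    scored = [(item_id, math.dist(query, vec)) for item_id, vec in dataset.items()]
    # Closest first; keep only the k best.
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]
```

A brute-force scan costs one distance computation per stored descriptor per query, which is exactly the work an index such as HNSW avoids on large datasets.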

Next, we can add images along with their descriptors. In ApertureDB, objects are connected together like nodes in a graph. Since a descriptor belongs to an image (or, in some cases, to a region of interest within an image), we not only add the image and its descriptor but also connect them to each other, so we can traverse between them at query time. The following query ingests a single image along with its descriptor, and it must be accompanied by a blob array of the form ``[ <descriptor feature vector>, <encoded image> ]``.

```json
[{
  "AddDescriptor": {
    "set": "coco_descriptors",
    "properties": {
      // A unique identifier matches the descriptor with the image.
      "yfcc_id": 6255196340
    },
    "_ref": 1
  }
}, {
  "AddImage": {
    "format": "jpg",
    "properties": {
      "id": 397133,
      "coco_url": "http://images.cocodataset.org/val2017/000000397133.jpg",
      "date_captured": {
        "_date": "2013-11-14T17:02:52"
      },
      "yfcc_id": 6255196340
    },
    // Add a connection between the image and the descriptor added above.
    "connect": {
      "class": "has_descriptor",
      "ref": 1
    }
  }
}]
```
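To make the pairing between commands and blobs concrete, here is a hypothetical Python helper that assembles the same two-command query together with its blob array. It assumes the descriptor blob is the raw little-endian ``float32`` array of the feature vector; that encoding, the ``DIMENSIONS`` constant, and the helper names are assumptions for illustration rather than documented requirements.

```python
import struct
from typing import Any, Dict, List, Sequence, Tuple

# Assumed to match the "dimensions" of the coco_descriptors set created above.
DIMENSIONS = 4096


def encode_descriptor(vector: Sequence[float]) -> bytes:
    """Pack a feature vector as little-endian float32 (assumed blob format)."""
    return struct.pack(f"<{len(vector)}f", *vector)


def build_ingest_query(coco_id: int, yfcc_id: int, coco_url: str,
                       date_captured: str, descriptor: Sequence[float],
                       image_bytes: bytes) -> Tuple[List[Dict[str, Any]], List[bytes]]:
    """Return (query, blobs) for one image and its descriptor."""
    if len(descriptor) != DIMENSIONS:
        raise ValueError(f"expected {DIMENSIONS} values, got {len(descriptor)}")
    query = [
        {"AddDescriptor": {
            "set": "coco_descriptors",
            "properties": {"yfcc_id": yfcc_id},
            "_ref": 1,  # lets the AddImage below refer to this descriptor
        }},
        {"AddImage": {
            "format": "jpg",
            "properties": {
                "id": coco_id,
                "coco_url": coco_url,
                "date_captured": {"_date": date_captured},
                "yfcc_id": yfcc_id,
            },
            "connect": {"class": "has_descriptor", "ref": 1},
        }},
    ]
    # Blob order follows the form described above: descriptor first, then image.
    blobs = [encode_descriptor(descriptor), image_bytes]
    return query, blobs
```

Calling it with the values from the JSON above, all 4096 feature values, and the JPEG bytes yields a ``(query, blobs)`` pair that is sent together in a single request.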

Note: In practice, ingestion is generally more efficient in larger batches. ApertureDB's Python client library provides utilities for efficient bulk loading.
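To sketch the batching idea without relying on any particular client API, the hypothetical helpers below pack several image/descriptor pairs into one query. Each pair gets its own ``_ref``, on the assumption that a ``_ref`` must be unique within a query so that every ``connect`` points at the right descriptor, and blobs are appended in the same order as the commands that consume them. The record layout, batch size, and ``float32`` encoding are also assumptions; for real bulk loading, prefer the client library's own utilities.

```python
import struct
from typing import Any, Dict, Iterator, List, Sequence, Tuple

# Hypothetical record layout: (coco_id, yfcc_id, descriptor, jpeg_bytes).
Record = Tuple[int, int, Sequence[float], bytes]


def chunked(records: Sequence[Record], batch_size: int) -> Iterator[Sequence[Record]]:
    """Yield consecutive slices of at most batch_size records."""
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


def build_batch(records: Sequence[Record],
                set_name: str = "coco_descriptors") -> Tuple[List[Dict[str, Any]], List[bytes]]:
    """Build one query (and its blob array) that ingests every record in the batch."""
    query: List[Dict[str, Any]] = []
    blobs: List[bytes] = []
    for ref, (coco_id, yfcc_id, descriptor, jpeg_bytes) in enumerate(records, start=1):
        query.append({"AddDescriptor": {
            "set": set_name,
            "properties": {"yfcc_id": yfcc_id},
            "_ref": ref,  # distinct per pair within this query
        }})
        query.append({"AddImage": {
            "format": "jpg",
            "properties": {"id": coco_id, "yfcc_id": yfcc_id},
            "connect": {"class": "has_descriptor", "ref": ref},
        }})
        # One descriptor blob and one image blob per pair, in command order.
        blobs.append(struct.pack(f"<{len(descriptor)}f", *descriptor))
        blobs.append(jpeg_bytes)
    return query, blobs
```

Looping ``for batch in chunked(records, 100)`` and calling ``build_batch(batch)`` then yields one request per batch; the batch size of 100 is an arbitrary placeholder to tune against your own data.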

Query for Similar Images

Now that the data is loaded, we can explore similar images. Suppose we have the following image along with its descriptor, and we want to find images in our dataset that are similar.

descriptor: ``[-15.469, -14.582, -12.185, -17.752, ...]``

The following query must be passed along with the descriptor for our source image. It finds the 5 closest descriptors in our descriptor set (the source image itself plus its 4 nearest neighbors) and retrieves their images using the connection we introduced above (think graph traversal).

```json
// Must be passed along with the input image descriptor as a binary blob.
[{
  "FindDescriptor": {
    // Indicates this result will be used by a later command.
    "_ref": 1,
    // Specify the descriptor set in which to search.
    "set": "coco_descriptors",
    // This input image happens to be in the dataset already,
    // so this will find itself + 4 neighbors.
    "k_neighbors": 5,
    "distances": true,
    "results": {
      "list": ["yfcc_id"]
    }
  }
}, {
  "FindImage": {
    // Get the images associated with the descriptor results above.
    "is_connected_to": {
      "ref": 1
    },
    // Ignore the input image itself.
    "constraints": {
      "yfcc_id": ["!=", 111501747]
    },
    "results": {
      "list": ["yfcc_id"]
    },
    // Return the pixel data.
    "blobs": true
  }
}]
```
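The source descriptor travels as the query's only input blob. A hypothetical Python helper that builds the query above and encodes the descriptor might look like the following; as before, the little-endian ``float32`` encoding and the helper name are assumptions, not part of ApertureDB's documented API.

```python
import struct
from typing import Any, Dict, List, Sequence, Tuple


def build_similarity_query(descriptor: Sequence[float], exclude_yfcc_id: int,
                           k: int = 5, set_name: str = "coco_descriptors"
                           ) -> Tuple[List[Dict[str, Any]], List[bytes]]:
    """Return (query, blobs) that find k neighbors and fetch their images."""
    query = [
        {"FindDescriptor": {
            "_ref": 1,
            "set": set_name,
            "k_neighbors": k,  # includes the source itself if it is already stored
            "distances": True,
            "results": {"list": ["yfcc_id"]},
        }},
        {"FindImage": {
            "is_connected_to": {"ref": 1},
            "constraints": {"yfcc_id": ["!=", exclude_yfcc_id]},
            "results": {"list": ["yfcc_id"]},
            "blobs": True,
        }},
    ]
    # The source descriptor is the only input blob (assumed little-endian float32).
    blobs = [struct.pack(f"<{len(descriptor)}f", *descriptor)]
    return query, blobs
```

Passing ``exclude_yfcc_id`` separately keeps the self-match out of the image results while still letting ``FindDescriptor`` report its distance of 0.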

This returns a response like the following, along with an array of binary blobs containing the four neighbor images.

```json
[{
  "FindDescriptor": {
    "entities": [{
      "_distance": 0.0,
      "yfcc_id": 111501747
    }, {
      "_distance": 310090.125,
      "yfcc_id": 888941469
    }, {
      "_distance": 330979.0,
      "yfcc_id": 558410077
    }, {
      "_distance": 331103.0,
      "yfcc_id": 5767368765
    }, {
      "_distance": 334098.0625,
      "yfcc_id": 5791374148
    }],
    "returned": 5,
    "status": 0
  }
}, {
  "FindImage": {
    "blobs_start": 0,
    "entities": [{
      "_blob_index": 0,
      "yfcc_id": 888941469
    }, {
      "_blob_index": 1,
      "yfcc_id": 558410077
    }, {
      "_blob_index": 2,
      "yfcc_id": 5767368765
    }, {
      "_blob_index": 3,
      "yfcc_id": 5791374148
    }],
    "returned": 4,
    "status": 0
  }
}]
```
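On the client side, the two parts of the response have to be joined: distances come from ``FindDescriptor``, while image blobs are referenced from ``FindImage`` entities via ``_blob_index``. The hypothetical helper below performs that join in Python. It assumes ``_blob_index`` indexes the returned blob array directly (in this response ``blobs_start`` is 0, so that reading and an offset-from-``blobs_start`` reading agree) and that a ``status`` of 0 means success.

```python
from typing import Any, Dict, List, Tuple


def pair_results(response: List[Dict[str, Any]],
                 blobs: List[bytes]) -> List[Tuple[int, float, bytes]]:
    """Join FindDescriptor distances with FindImage blobs, closest first."""
    find_desc = response[0]["FindDescriptor"]
    find_img = response[1]["FindImage"]
    # Assumption: a status of 0 means the command succeeded.
    if find_desc["status"] != 0 or find_img["status"] != 0:
        raise RuntimeError("similarity query failed")
    distance_by_id = {e["yfcc_id"]: e["_distance"] for e in find_desc["entities"]}
    matches = []
    for entity in find_img["entities"]:
        yfcc_id = entity["yfcc_id"]
        # Assumption: _blob_index indexes the returned blob array directly.
        image = blobs[entity["_blob_index"]]
        matches.append((yfcc_id, distance_by_id[yfcc_id], image))
    matches.sort(key=lambda match: match[1])
    return matches
```

Applied to the response above, the source image (``yfcc_id`` 111501747) is absent because the ``FindImage`` constraint filtered it out, and the four neighbors come back in order of increasing distance, starting with 888941469 at 310090.125.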

Sample results from the query:

This is just the tip of the iceberg when it comes to navigating visual data. Similarity search can be combined with the rest of ApertureDB's query functionality, such as the property constraints and graph connections used above, in any number of ways. We invite you to try it out yourself.